The first true creative suite for local AI.

The incredible response to LLM VibeCheck confirmed what we knew all along: the community is hungry for powerful, no-BS tools to control their own AI. We gave you a way to manage the chaos. Now, we’re going to give you a universe to create in.

LLM VibeCheck was a critical first step. It allowed us to connect with hundreds of users, understand the real-world bottlenecks, and squash the worst bugs in local AI workflows. That feedback is the foundation for our next evolution. An updated, more robust version of LLM VibeCheck is coming soon, incorporating everything we’ve learned.

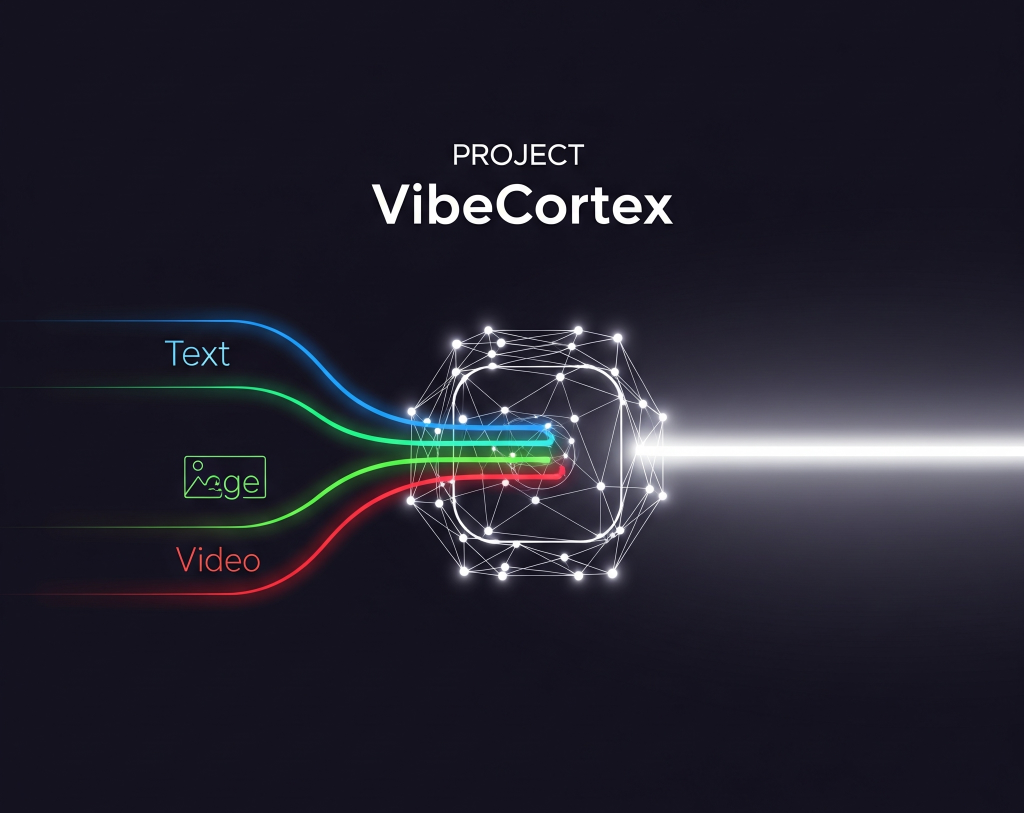

But VibeCheck only ever solved one part of the equation: text. The creative workflow remains fractured, split between a dozen different apps for images and video. This is an unacceptable limit on creativity. We’re here to tear it down.

Today, we are officially announcing our next major undertaking, codenamed Project VibeCortex.

Project VibeCortex is not a question of “what if.” It is a statement of what we are building: a single, seamless interface that orchestrates text, image, AND video generation from local models. We are moving beyond simple management and are now architecting a true sandbox for creation, powered by your own hardware.

This is the next horizon. Our work on LLM VibeCheck taught us how to build the car. Now, with Project VibeCortex, we’re building the damn rocket ship.

Join our Telegram channel to follow the journey and witness the buildout.

The vibe continues.

A Quick Reality Check on Hardware

But a rocket ship needs a serious engine. To be fully transparent about what it takes to power this vision, let’s get real about the hardware. This isn’t your average gaming setup. To orchestrate text, image, and video models, you need real firepower.

We’ve broken it down into two levels: the entry point for enthusiasts, and the powerhouse for a truly seamless creative experience.

Level 1: The Enthusiast’s Rig (Minimum Viable)

(This is where you can start, but expect to manage your models carefully.)

GPU: A single card with 24 GB of VRAM is the absolute baseline. This lets you run most image models alongside a small or mid-size text model, or one video model at a time.

Examples: NVIDIA RTX 3090 (the budget king) or an RTX 4090.

System RAM: 64 GB. Less than this, and you’ll struggle with loading larger models.

CPU: A modern 8-core CPU (e.g., Ryzen 7 / Core i7).

Storage: 2 TB NVMe SSD. Model files are huge and need to be loaded fast.

The Bottom Line: Yes, you can run it. But you’ll be juggling models in and out of VRAM. It works, but it’s not a “flow” state.

Level 2: The VibeCortex Powerhouse (Recommended)

(This is where the magic happens. No compromises, pure creative flow.)

GPU: A dual-GPU setup with two 24 GB cards (48 GB of VRAM in total). This is the game-changer. It allows you to keep a powerful text model (like a quantized Llama 3.1 70B) and an image or video model (like Stable Diffusion 3 for images) loaded at the same time.

Examples: 2 x RTX 3090 or 2 x RTX 4090.
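As a rough sanity check on the Level 2 numbers, the memory needed just to hold a model’s weights is about parameters × bits per weight ÷ 8. The sketch below is a back-of-the-envelope estimate, not a measurement: `weight_memory_gb` is a hypothetical helper, and the figures ignore the KV cache, activations, and framework overhead, so real usage runs higher. It shows why a 70B model only fits on two 24 GB cards in a quantized form.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate GB needed just to store a model's weights (hypothetical helper)."""
    # bytes = params * bits / 8; with params given in billions, the result is in GB (1e9 bytes)
    return params_billion * bits_per_weight / 8


# Assumption: two 24 GB cards, as in the Level 2 rig, treated as one combined pool
VRAM_BUDGET_GB = 2 * 24

for bits in (16, 8, 4):
    need = weight_memory_gb(70, bits)
    verdict = "fits" if need < VRAM_BUDGET_GB else "does not fit"
    print(f"70B @ {bits}-bit: ~{need:.0f} GB of weights ({verdict} in {VRAM_BUDGET_GB} GB)")
```

At 16-bit the weights alone need about 140 GB and at 8-bit about 70 GB, so neither fits. At 4-bit they drop to about 35 GB, leaving roughly 13 GB across both cards for the context cache and an image model: workable, but not roomy.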

System RAM: 128 GB or more. This removes most bottlenecks when loading, unloading, and moving data between models.

CPU: A high-end, multi-core CPU (e.g., AMD Threadripper / Core i9).

Storage: 4+ TB of NVMe SSD storage for a massive library of models.

The Bottom Line: This is the rig we’re building for. Instant switching between text, images, and video. No waiting. No compromises. This is the true VibeCortex experience.
