Alright, let’s talk shop. The world of local Large Language Models is exploding, but let’s be real: for newcomers, it’s a jungle. You’ve got hardware specs, cryptic console commands, and a million models to choose from. We saw a gap—a need for a tool that just works, something with a good vibe that cuts through the noise.

That’s how LLM VibeCheck was born. This is the story of how we took a pile of code and, in a rapid-fire, collaborative sprint, turned it into a polished, user-friendly LLM utility.

Phase 1: The Code Drop & The Vision

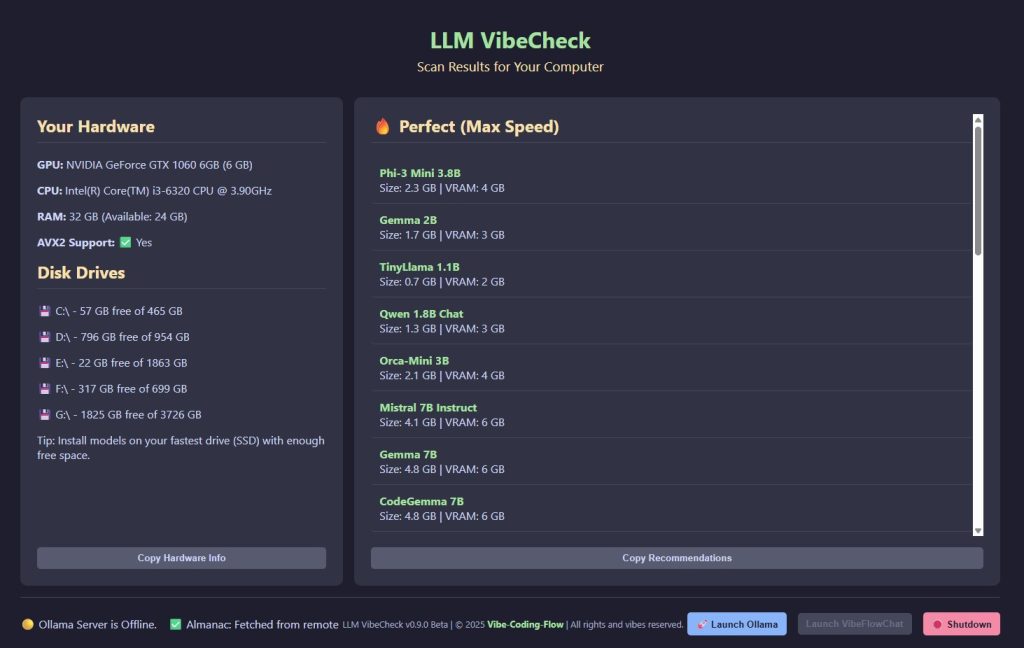

It all started with a solid foundation: a Python project with a Flask backend, some smart hardware-scanning logic in a core_logic.py file, and a basic HTML frontend. It worked, but it was raw. The goal was to take this proof-of-concept and forge it into a bulletproof tool that anyone could use.

Our mission was simple: make local LLMs easy and intuitive. No more guesswork. Just a clean UI that tells you what your rig can handle and gets you chatting with an AI, fast.

Phase 2: Supercharging the UX

A tool without a great user experience is just a script with extra steps. We rolled up our sleeves and started iterating.

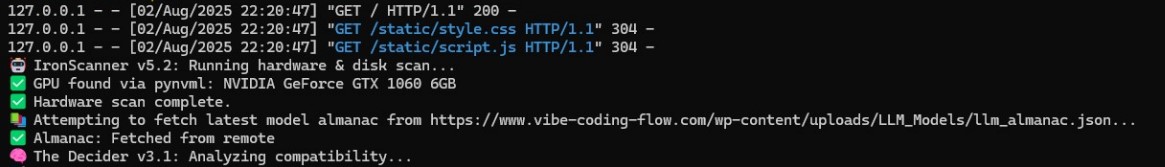

The “Future-Proof” Almanac: The original code had a hardcoded list of LLMs. That’s a ticking time bomb; it’d be outdated in a week. The first major upgrade was ripping that out and replacing it with a dynamic system. On startup, the app fetches an llm_almanac.json file from our website, so we can add the hottest new models without users ever needing to update the app. Game-changer.
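Here is a minimal sketch of that startup fetch, not the actual VibeCheck code. The URL, the fallback list, and the function names are all placeholders we made up for illustration; the idea is simply "try the remote file, fall back to something bundled if the network is down."

```python
import json
import urllib.request

# Hypothetical values: the real URL and almanac schema are not shown in this post.
ALMANAC_URL = "https://example.com/llm_almanac.json"
FALLBACK_ALMANAC = [{"name": "example-model", "min_ram_gb": 8}]


def fetch_json(url, timeout=5):
    """Download a URL and decode its body as JSON."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def load_almanac(url=ALMANAC_URL, fetch=fetch_json):
    """Return the remote almanac, or the bundled fallback if the fetch fails."""
    try:
        return fetch(url)
    except (OSError, ValueError):
        # OSError covers network failures and timeouts;
        # ValueError covers malformed JSON or bad encoding.
        return FALLBACK_ALMANAC
```

Passing the fetcher in as an argument keeps the fallback path easy to exercise without touching the network.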

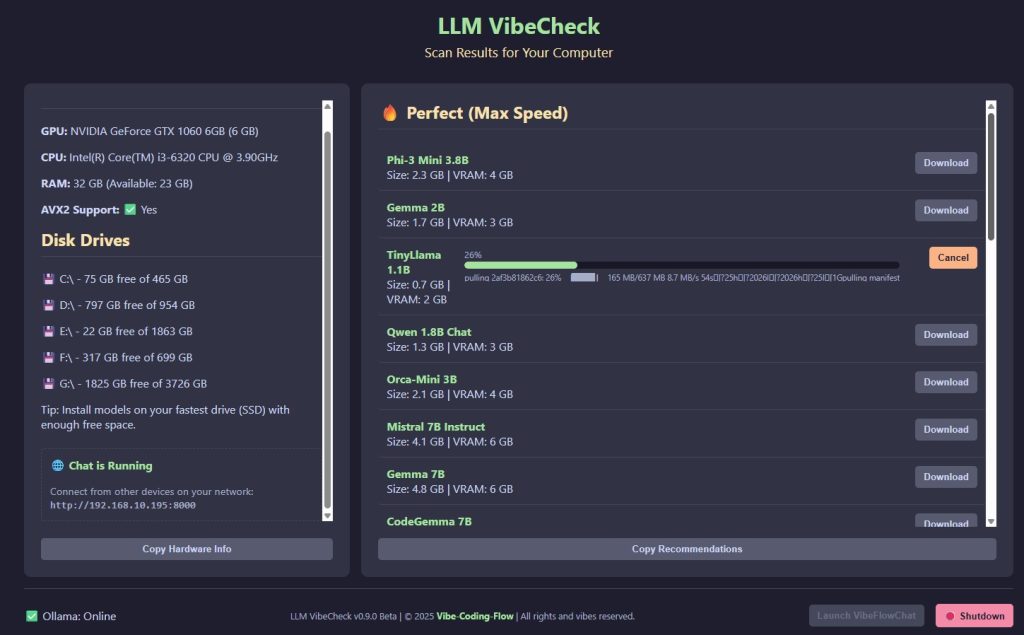

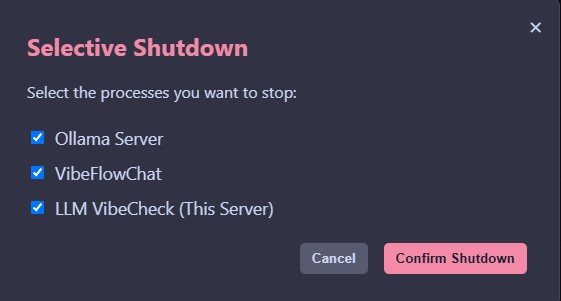

Smarter Buttons, Better Flow: We added quality-of-life features that just make sense. We disabled the “Launch Chat” button after one click to prevent a user from spawning an army of chat windows. We transformed the brutal “Shutdown All” button into a slick, selective shutdown modal, giving users control over what stays and what goes.

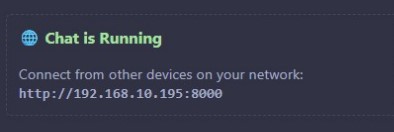

Network Visibility: A killer feature we added was displaying the local network IP address once the chat was running. Suddenly, you could grab your phone and connect to the chat running on your desktop. Simple, but incredibly useful.
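One common way to find the machine's LAN address in Python looks like the sketch below. This is an assumption about the approach, not a copy of what VibeCheck ships: it opens a UDP socket, "connects" it to a private address so the OS picks the outgoing interface, and reads back the local side of that socket. No packet is actually sent.

```python
import socket


def get_local_ip():
    """Best-effort guess at this machine's LAN IPv4 address."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # connect() on a UDP socket sends nothing; it only makes the OS
        # choose a route, which tells us which local address would be used.
        sock.connect(("10.255.255.255", 1))
        return sock.getsockname()[0]
    except OSError:
        # No usable network route: fall back to loopback.
        return "127.0.0.1"
    finally:
        sock.close()
```

The UI can then show something like `http://<that address>:<chat port>` for the phone to open; the port depends on how the chat app is configured.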

Phase 3: The Great Refactor (Performance & Security)

The app was getting feature-rich, but we noticed a problem in the logs: it was re-scanning the user’s hardware and pinging our website every 10 seconds. Inefficient.

We tore the API apart and rebuilt it from the ground up.

Before: One massive API call (/api/get_full_scan) that did everything, all the time.

After: Two lean, specialized calls. /api/get_initial_data runs once at launch to grab static info like hardware specs. /api/get_dynamic_status runs every 10 seconds to get just the stuff that changes, like Ollama’s online status. The result? The expensive hardware scan no longer repeats on every poll, which cuts out a lot of redundant processing and network traffic.
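The shape of that split can be sketched in plain Python, leaving the Flask routing out. Everything here is illustrative: the function names mirror the endpoints, the scan body is a stand-in for the real hardware logic, and checking Ollama by probing its default port 11434 is our assumption about how "online" might be detected.

```python
import functools
import platform
import socket

# Counter used only to show that the expensive scan runs once.
SCAN_STATS = {"hardware_scans": 0}


@functools.lru_cache(maxsize=1)
def get_initial_data():
    """Static info: computed on the first call, served from cache afterwards."""
    SCAN_STATS["hardware_scans"] += 1
    return {"os": platform.system(), "machine": platform.machine()}


def ollama_is_online(host="127.0.0.1", port=11434, timeout=0.5):
    """Return True if something accepts TCP connections on Ollama's default port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def get_dynamic_status(check=ollama_is_online):
    """Cheap info that changes over time; safe to poll every few seconds."""
    return {"ollama_online": check()}
```

In a Flask app, each function would sit behind its own route and return its dict as JSON. The frontend calls the first once and polls the second on a timer.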

Then we locked it down. To prevent unauthorized access and protect our model list from bots, we implemented a server-side validation check. This ensures that only legitimate copies of the VibeCheck app can access the data. After wrestling with some gnarly 500 errors, we got it dialed in. Now, only VibeCheck gets the goods.

Phase 4: Taming the Final Boss – The Windows Executable

Writing the code is one thing. Shipping it is another. Compiling a Python app into a standalone Windows .exe is a notorious minefield, and we hit every classic trap.

The Infinite Loop: We hit the classic PyInstaller recursion bug, where the frozen .exe keeps relaunching itself. Fixed by calling multiprocessing.freeze_support() at startup. Check.
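For reference, the usual placement looks like this. The main() function is a hypothetical stand-in for the real startup code; the part that matters is that freeze_support() is the first thing called under the `__main__` guard.

```python
import multiprocessing


def main():
    """Stand-in for the real startup code (hypothetical)."""
    return "VibeCheck started"


if __name__ == "__main__":
    # In a frozen Windows build this must run before anything else.
    # When run normally by the Python interpreter, it has no effect.
    multiprocessing.freeze_support()
    main()
```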

The HTTPS Black Hole: The compiled .exe kept throwing a 500 Internal Server Error whenever it tried to contact our site. The culprit? Missing SSL certificates: the packaged build had no CA bundle to verify the HTTPS connection against. We pulled in the certifi library to bundle the necessary certs, fixing the issue for good.
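A rough sketch of the certifi approach, assuming the app builds its own SSL context (the post doesn't say which HTTP client VibeCheck uses). certifi.where() returns the path to certifi's bundled CA file. The try/except is only there so the sketch still runs on a machine without certifi installed.

```python
import ssl

try:
    import certifi
    CA_BUNDLE = certifi.where()  # path to certifi's bundled CA file
except ImportError:
    CA_BUNDLE = None  # fall back to the system's default certificates


def make_ssl_context():
    """Build a verifying SSL context, preferring certifi's CA bundle."""
    return ssl.create_default_context(cafile=CA_BUNDLE)
```

The context can be handed to `urllib.request.urlopen(url, context=make_ssl_context())`. With the requests library, the equivalent is passing `verify=certifi.where()`.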

The Phantom Folders: The final hurdle was the .exe failing to find its own UI—the templates and static folders. We implemented a resource_path function to ensure that no matter where the app runs, it always knows where to find its assets.
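The helper usually looks something like the sketch below; treat it as the common PyInstaller pattern rather than our exact code. A one-file PyInstaller build unpacks its bundled files into a temporary folder and exposes that path as sys._MEIPASS. When running from source, that attribute doesn't exist, so the helper falls back to the current directory.

```python
import os
import sys


def resource_path(relative_path):
    """Resolve a bundled asset path both from source and from a frozen build."""
    # sys._MEIPASS is set by PyInstaller at runtime; absent when run from source.
    base_path = getattr(sys, "_MEIPASS", os.path.abspath("."))
    return os.path.join(base_path, relative_path)
```

With Flask, the result can be passed straight in, for example `Flask(__name__, template_folder=resource_path("templates"), static_folder=resource_path("static"))`.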

The Vibe is Right

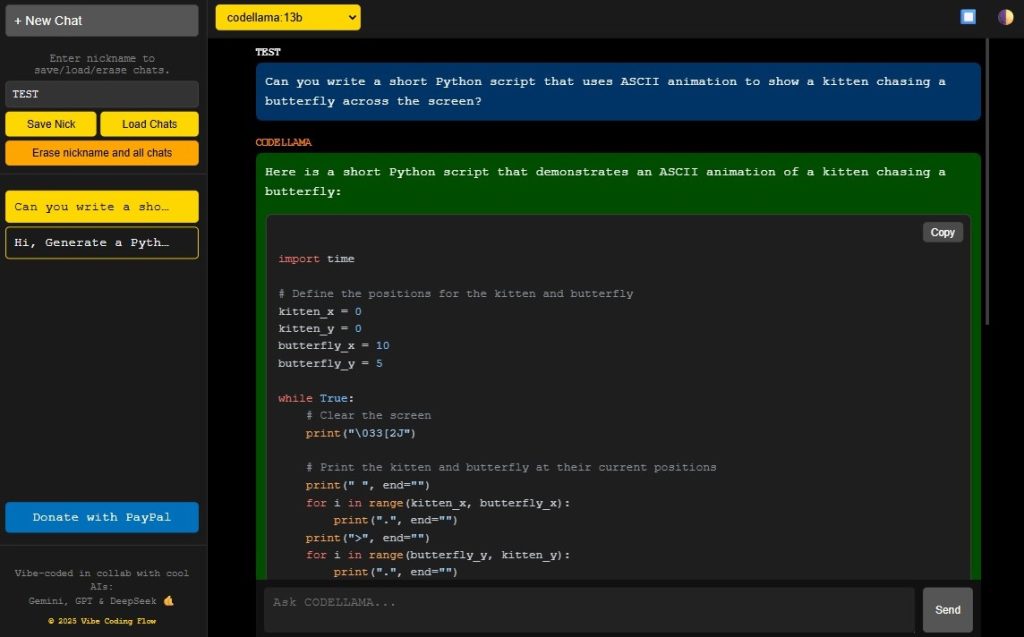

What started as a collection of scripts is now a robust, user-friendly application. We went from a static tool to a dynamic, secure, and efficient LLM manager. Best of all, LLM VibeCheck works hand-in-hand with our VibeFlow Chat app. VibeFlow Chat automatically sees every model you have installed locally, letting you switch between them on the fly from a simple dropdown menu. You can chat with multiple models in the same window, comparing their answers side-by-side without ever breaking your flow. Every feature, every bug fix, and every refactor was about staying true to the original vision: making local AI accessible to everyone.

And that’s the vibe.

Download LLM VibeCheck v 1.0 (Suite)

This project is no longer supported and cannot be downloaded.
