It all started with a simple idea — create a local AI chat that runs right on your machine.

No cloud, no third-party services. Just you, your hardware, and clean, honest code.

And of course, your AI sidekick, always ready to help debug your brain.

This isn’t another tutorial. It’s a story — about bugs, insights, facepalms and those little wins that make building software worth it.

The Memory-Loss Bug

The first version worked like a charm — UI built, Python backend running, Ollama connected.

We typed:

“Hey, how are you?” — it replied.

Then:

“What did I ask you before?” — it blanked.

“No idea.”

The bot had amnesia. It couldn’t remember previous messages.

At first, we blamed the frontend — must be the JavaScript, right?

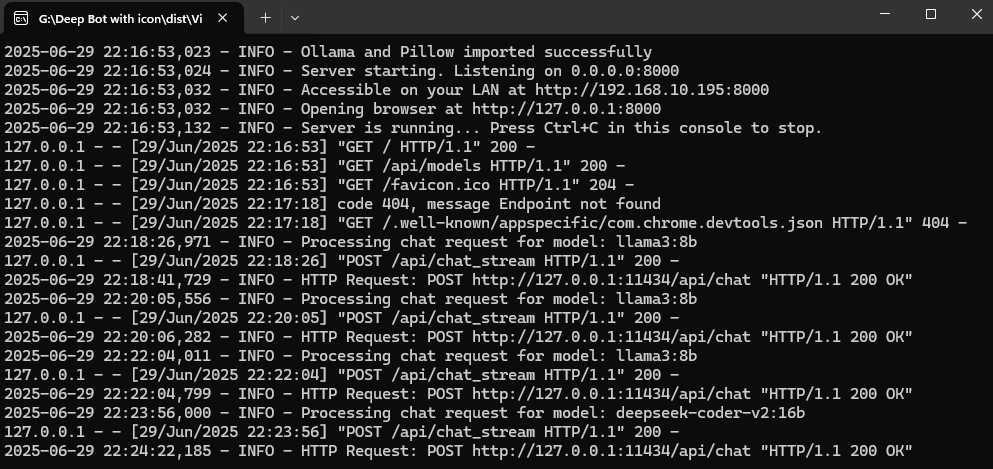

But the logs showed everything was being sent properly. The browser did its job.

The problem? Our server was calling ollama.generate(), which takes a single prompt, so every request reached the model with no memory of the ones before it.

We switched to ollama.chat() and passed in the full message history on every request. Boom, it worked.
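Here is a minimal sketch of that pattern, not the project's actual server code. The backend keeps a running list of messages and sends the whole list every time, which is the shape ollama.chat() expects. The ChatSession class and its send callback are hypothetical names; send stands in for whatever actually calls the model.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": "user" or "assistant", "content": "..."}


class ChatSession:
    """Hypothetical helper that keeps the conversation so the model sees all of it."""

    def __init__(self, send: Callable[[List[Message]], str]) -> None:
        # `send` takes the full message list and returns the assistant's reply text.
        self.send = send
        self.messages: List[Message] = []

    def ask(self, text: str) -> str:
        self.messages.append({"role": "user", "content": text})
        # Pass the entire history, not just the latest prompt.
        reply = self.send(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


# Example wiring (assumes the `ollama` package and a pulled model named "llama3"):
# import ollama
# session = ChatSession(lambda msgs: ollama.chat(model="llama3", messages=msgs)["message"]["content"])
```

Injecting send keeps the memory logic testable without a running model: a fake callback can simply count how many messages it receives.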

🔧 Lesson: Logs don’t lie. When in doubt, trace it out.

The Mystery of the Missing Models

We added new models — Llama 3, DeepSeek — but our dropdown still showed only the old one.

Strange thing: ollama list in the terminal showed them all, but the equivalent call from Python returned… nothing.

Turns out, the Python library was outdated and couldn’t parse the response.

So we ditched the wrapper and made a raw HTTP request to Ollama’s API.

Direct approach = instant fix.
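The post doesn't show the request itself, so this is a sketch using only the standard library. It assumes Ollama is listening on its default local address, http://localhost:11434, and calls the GET /api/tags endpoint, which returns the locally installed models as a JSON object with a "models" array. It's worth checking the response shape against your Ollama version.

```python
import json
import urllib.request
from typing import List

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address (assumed unchanged)


def parse_model_names(payload: dict) -> List[str]:
    """Pull model names out of an /api/tags response body."""
    return [model["name"] for model in payload.get("models", [])]


def list_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> List[str]:
    """Ask Ollama directly over HTTP instead of going through a client wrapper."""
    # Connection errors (urllib.error.URLError) are deliberately not caught, so a
    # stopped server shows up as an error rather than as an empty model list.
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        payload = json.load(resp)
    return parse_model_names(payload)
```

list_models() returns names such as "llama3:latest", ready for the dropdown. Parsing is split out so it can be checked without a server.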

🚀 Takeaway: If a tool holds you back — bypass it. Simplicity wins.

The .exe Struggle

Turning a working script into a clean .exe? That’s a whole new game.

- First, the tray icon crashed the build: broken data stream.
- Then the app silently died, with no logs and no clues. A GUI library was conflicting with the system.
- And of course, the good old "Address already in use" error, caused by zombie processes hogging the port.
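The post doesn't say exactly how the port clash was resolved. One defensive option, sketched here with the standard library only, is to test the port before starting the server and fall back to the next free one instead of crashing. The function names and the default host are assumptions; 0.0.0.0 matches a server that other devices on the network can reach.

```python
import socket


def port_is_free(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if (host, port) can be bound right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        try:
            s.bind((host, port))
        except OSError:
            # Typically "Address already in use": a leftover process still holds it.
            return False
    return True


def pick_port(preferred: int, host: str = "0.0.0.0", attempts: int = 10) -> int:
    """Try the preferred port first, then the next few, before giving up loudly."""
    for port in range(preferred, preferred + attempts):
        if port_is_free(port, host):
            return port
    raise RuntimeError(f"No free port in {preferred}-{preferred + attempts - 1}")
```

This doesn't kill the zombie process (on Windows, netstat -ano shows which PID owns the port), and there is a small race between the check and the real bind, so the server should still handle the error. The check mainly turns a cryptic crash into a clear message.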

So we stripped it down:

No fluff. No fancy tray icons.

Just a console app with live logs.
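A minimal sketch of "a console app with live logs", assuming Python's standard logging module (the post doesn't name its logging setup). Besides streaming log lines to the console, it installs a sys.excepthook so an unhandled exception gets logged instead of the app dying silently. The logger name "vibeflow" is hypothetical.

```python
import logging
import sys


def setup_console_logging(level: int = logging.INFO) -> logging.Logger:
    """Send log lines to the console window so requests and errors are visible live."""
    logger = logging.getLogger("vibeflow")  # hypothetical logger name
    logger.setLevel(level)
    if not logger.handlers:  # avoid duplicate output if called twice
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
        logger.addHandler(handler)

    def log_uncaught(exc_type, exc, tb):
        # Let Ctrl+C behave normally; log everything else with its traceback.
        if issubclass(exc_type, KeyboardInterrupt):
            sys.__excepthook__(exc_type, exc, tb)
            return
        logger.critical("Unhandled exception", exc_info=(exc_type, exc, tb))

    sys.excepthook = log_uncaught
    return logger
```

sys.excepthook only sees exceptions from the main thread; a threaded server would also need threading.excepthook (Python 3.8+) for crashes in worker threads.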

We also added a license popup on first launch and a proper version.txt for PyInstaller, so the .exe shows real version details in its Windows file properties.
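For the first-launch popup, one simple approach is a marker file written once the user accepts; this is a sketch, not the project's code. The marker path, the function names, and the use of tkinter for the dialog are all assumptions (the post doesn't say which library shows the popup), and the dialog is kept separate so the first-run logic can be tested on its own.

```python
from pathlib import Path
from typing import Callable


def ensure_license_accepted(marker: Path, ask: Callable[[], bool]) -> bool:
    """Prompt only on first launch; remember acceptance in a marker file."""
    if marker.exists():
        return True  # accepted on an earlier run, skip the popup
    if not ask():
        return False  # declined: the caller should exit
    marker.parent.mkdir(parents=True, exist_ok=True)
    marker.write_text("accepted\n", encoding="utf-8")
    return True


def tk_license_dialog(text: str) -> bool:
    """Yes/no license dialog via tkinter, imported lazily so nothing GUI loads until needed."""
    import tkinter as tk
    from tkinter import messagebox

    root = tk.Tk()
    root.withdraw()  # hide the empty main window behind the dialog
    try:
        return messagebox.askyesno("License", text)
    finally:
        root.destroy()


# Example wiring (hypothetical path and license text):
# marker = Path.home() / ".vibeflow" / "license_accepted"
# if not ensure_license_accepted(marker, lambda: tk_license_dialog("License terms...")):
#     raise SystemExit(1)
```

The version.txt half is separate: PyInstaller embeds it as the Windows version resource when it's passed through the --version-file option, and the bundled pyi-grab_version utility can dump the resource of an existing .exe to use as a starting template.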

💡 Final insight: Stability often comes from subtraction, not addition.

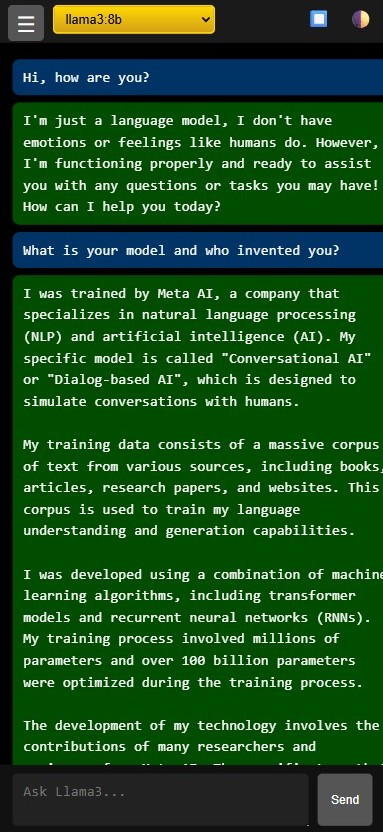

What We Built

VibeFlow Chat 1.0 is:

- Fully local: no cloud, no tracking
- Runs on any Windows machine
- Reachable from other devices on the network
- Works with any Ollama model
- Saves chat history and supports multiple themes
- Compiled into a clean, standalone .exe

Final Words

This isn’t a story about perfect code. It’s about progress.

About debugging with an AI partner, learning from every crash, and shipping something real.

This is what vibe coding looks like.

Start with the idea. Trust the logs. Break things. Fix them better. Repeat.
